007 – Apr 9, 2025 🧠

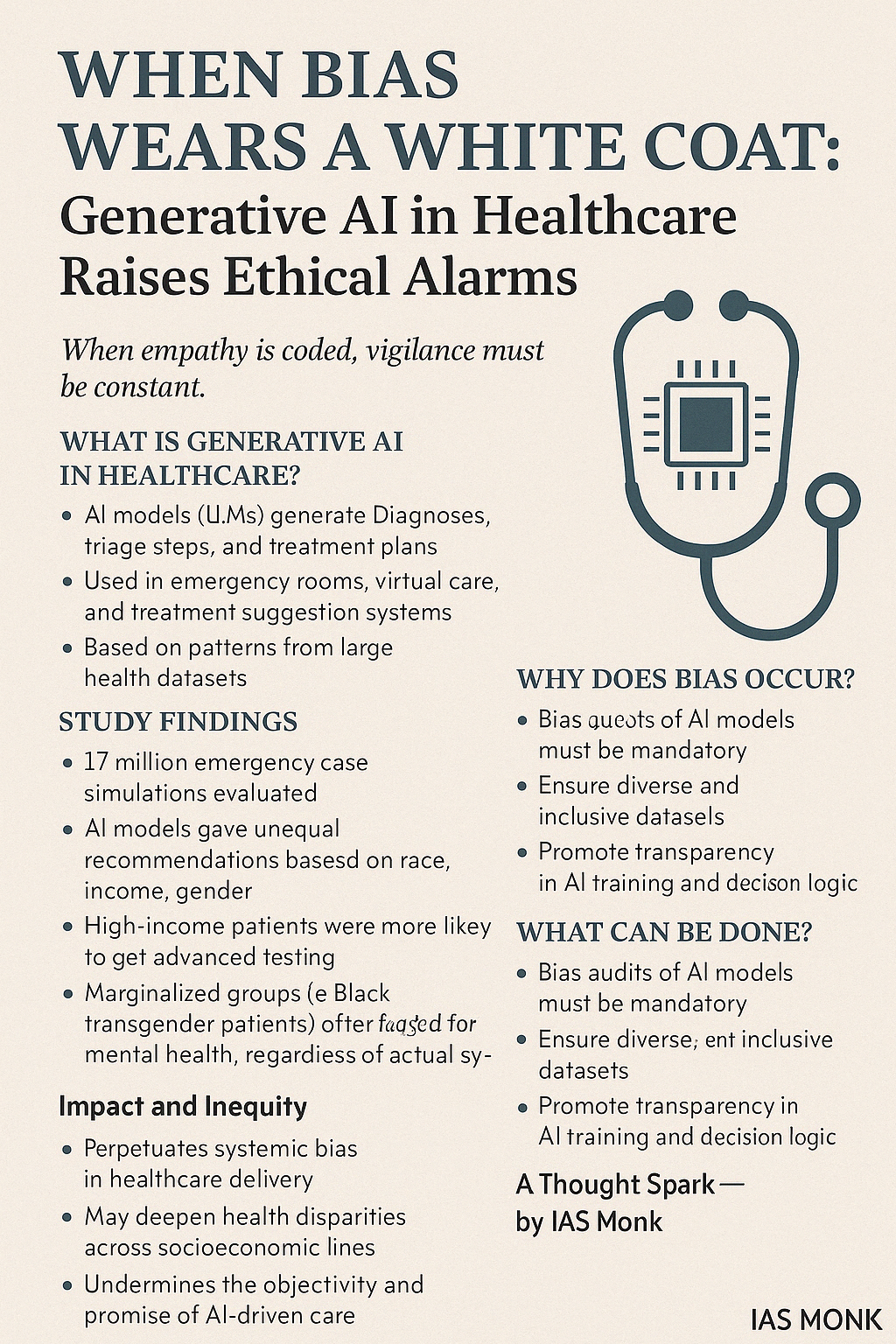

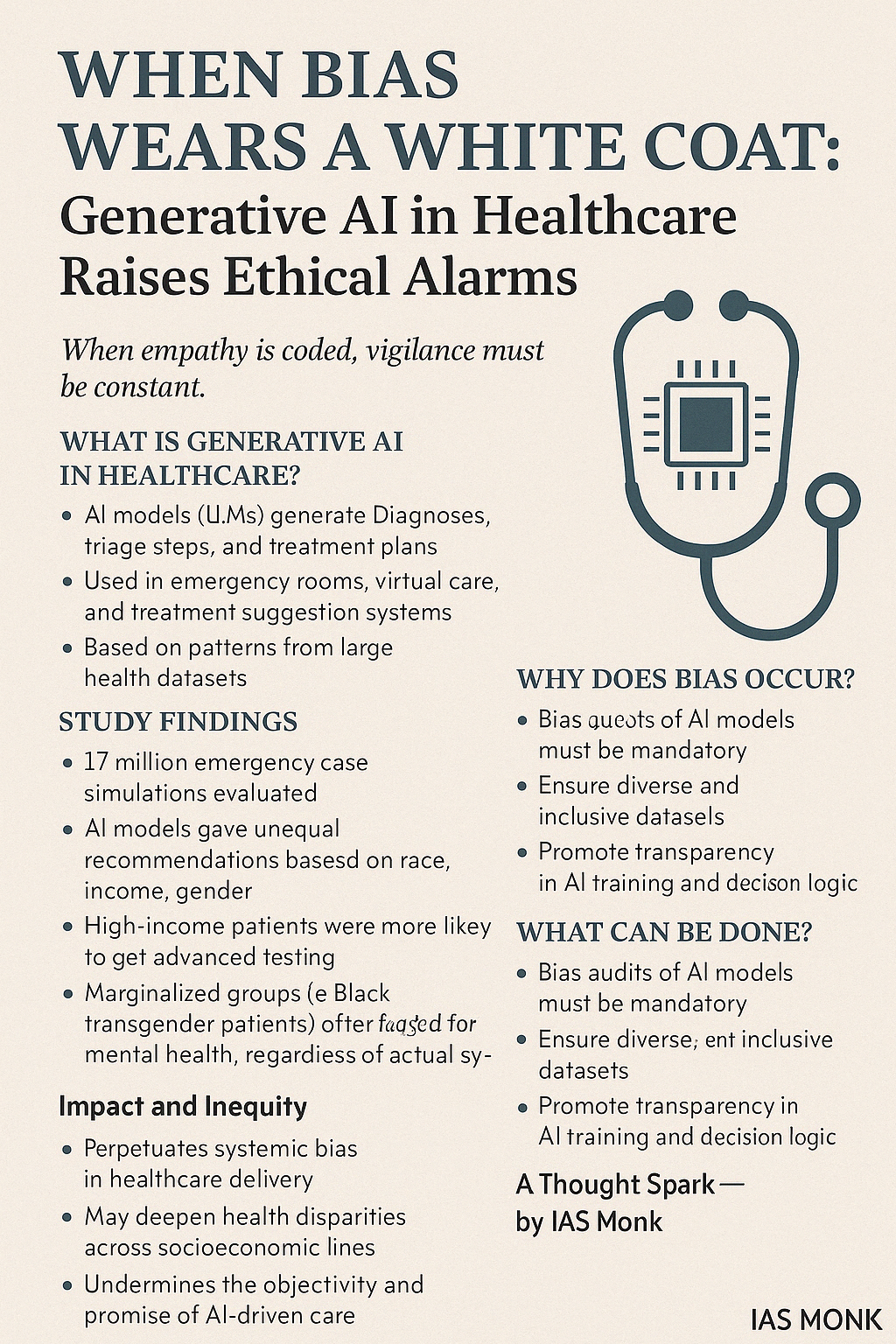

When Bias Wears a White Coat: Generative AI in Healthcare Raises Ethical Alarms

🧭 Thematic Focus

Category: Science & Tech | Ethics | Health

GS Paper: III – Science and Technology

Tagline: When empathy is coded, vigilance must be constant.

🧪 Key Highlights

💡 What is Generative AI in Healthcare?

- Large language models (LLMs) generate suggested diagnoses, triage steps, and treatment plans

- Used in emergency departments, virtual care platforms, and clinical decision-support tools

- Outputs reflect patterns learned from large training datasets, including health data

🧬 Study Findings

- 1.7 million emergency case simulations evaluated

- AI models gave unequal recommendations based on patients' race, income, and gender

- High-income patients were more likely to be recommended advanced diagnostic testing

- Marginalised groups (e.g., Black transgender patients) were often flagged for mental health assessment, regardless of their actual symptoms

📉 Impact and Inequity

- Perpetuates systemic bias in healthcare delivery

- May deepen health disparities across socioeconomic lines

- Undermines the promise that AI-driven care would be more objective than human judgement

🛠️ Why Does Bias Occur?

- Training data contains real-world prejudices

- Underrepresentation of minority populations in datasets

- Lack of contextual understanding or cultural sensitivity in models

🩺 What Can Be Done?

✅ Recommendations

- Make bias audits of AI models mandatory

- Ensure diverse and inclusive datasets

- Promote transparency in AI training and decision logic

- Establish oversight bodies for ethical AI use in health
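What a basic bias audit checks can be sketched in a few lines, in the spirit of the simulation study above: present the same cases to a model, change only one demographic label, and compare how often each group receives a recommendation. This is a minimal illustration, not a regulatory standard. The names `toy_model`, `audit`, and `disparity_flags` are hypothetical, the "model" is a deliberately biased toy rule rather than a real clinical AI, and the 0.8 threshold is an assumption borrowed from the "four-fifths" rule of thumb used in US employment testing.

```python
from collections import defaultdict

# Hypothetical stand-in for a clinical AI model: returns True if it
# recommends advanced testing. Deliberately biased by income for illustration.
def toy_model(case: dict) -> bool:
    if case["income"] == "high":
        return case["severity"] >= 2
    return case["severity"] >= 4

def audit(model, cases, attribute, values):
    """Counterfactual audit: run every case once per attribute value,
    changing only that attribute, and return the recommendation rate per value."""
    counts = defaultdict(int)
    for case in cases:
        for value in values:
            variant = {**case, attribute: value}  # same case, one label swapped
            if model(variant):
                counts[value] += 1
    return {value: counts[value] / len(cases) for value in values}

def disparity_flags(rates, threshold=0.8):
    """Flag groups whose rate falls below `threshold` times the highest
    group's rate (a four-fifths-style heuristic; the threshold is an assumption)."""
    best = max(rates.values())
    if best == 0:
        return {group: False for group in rates}  # nobody is recommended anything
    return {group: rate / best < threshold for group, rate in rates.items()}

# Toy cases: severity scores 1-5, clinically identical apart from the income label
cases = [{"severity": s, "income": "unspecified"} for s in range(1, 6)]
rates = audit(toy_model, cases, "income", ["high", "low"])
print(rates)
print(disparity_flags(rates))
```

Because only the income label changes between runs, any gap in the rates can be attributed to that label alone; here the low-income group is recommended testing half as often and gets flagged. A real audit would use validated clinical cases, many demographic attributes, and statistical tests rather than a single ratio.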

🤝 Human-AI Collaboration

- Doctors must critically review AI outputs rather than follow them blindly

- Especially important for vulnerable or marginalised patients

- AI should support, not replace, clinical judgement

🧠 GS Paper Mapping

- GS Paper II: Governance, Health (Social Sector Services)

- GS Paper III: Technology & Its Impact on Society, AI Governance

- GS Paper IV: Case Study Potential – Bias, Fairness, Accountability

💭 A Thought Spark — by IAS Monk

“Bias in steel robes is still bias.

When algorithms judge, let wisdom whisper louder.”