004. Weights Unleashed – OpenAI’s Return to Open Models

Science & Tech, Artificial Intelligence, Data Ethics, Innovation Strategy

By IAS Monk / April 2, 2025

After years of closed-source innovation, OpenAI is returning to the open frontier.

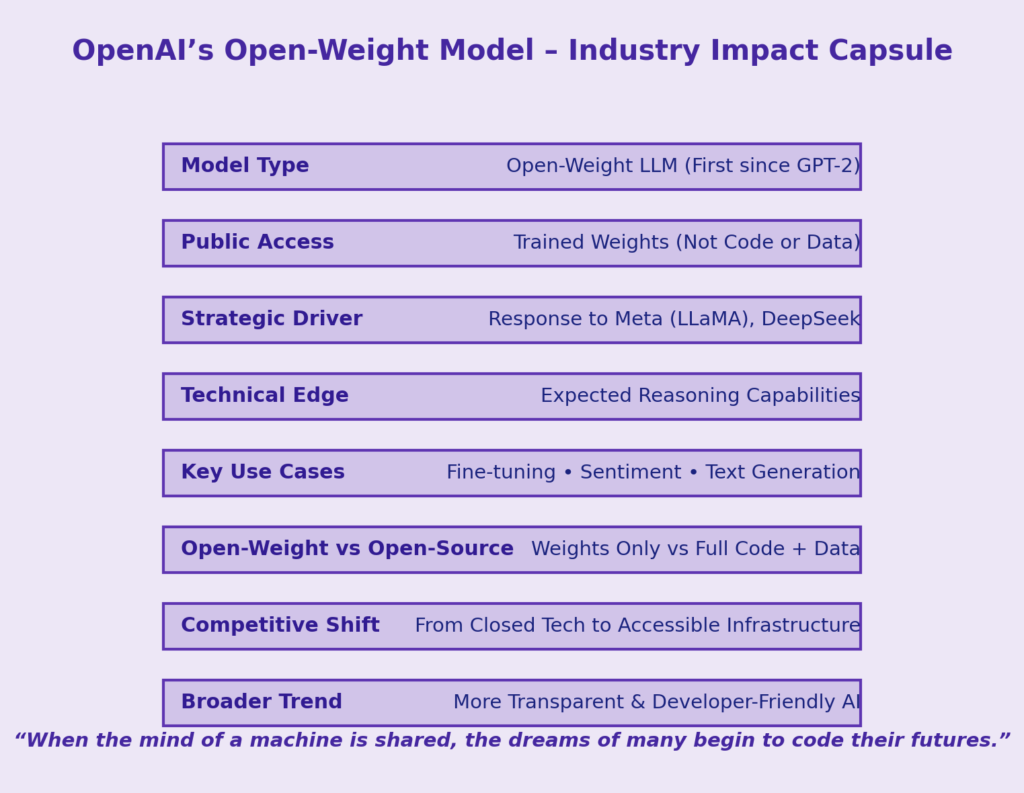

In response to rising competition and developer demand, OpenAI is preparing to launch a new open-weight language model—its first since GPT-2.

This move places the company back alongside a growing field of open-weight AI developers, such as Meta (with LLaMA) and China's DeepSeek.

🧠 What Are Weights in AI?

- In LLMs, weights are internal numerical values (parameters) learned during training

- They shape how the model predicts words, solves problems, and understands language

- The better the weights are tuned during training, the more capable the model
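To make "weights" concrete, here is a minimal sketch. It is not how production LLMs are built. It is a toy next-word predictor whose only knowledge is a small weight matrix `W`, learned by gradient descent on a six-word sentence. The vocabulary, corpus, learning rate, and the helper names `train_bigram` and `predict_next` are hypothetical and chosen only for illustration. Real LLMs hold billions of weights inside much larger networks, but the principle is the same: training nudges the numbers until the model's predictions match the data.

```python
import math

# Hypothetical toy vocabulary and six-word training "corpus" (illustration only).
VOCAB = ["the", "cat", "sat", "mat", "on"]
CORPUS = "the cat sat on the mat".split()


def softmax(scores):
    """Convert raw scores into probabilities that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def train_bigram(corpus, vocab, epochs=200, lr=0.5):
    """Learn weights W where W[i][j] scores how likely word j follows word i."""
    idx = {w: i for i, w in enumerate(vocab)}
    n = len(vocab)
    W = [[0.0] * n for _ in range(n)]  # all-zero weights: the model knows nothing yet
    pairs = [(idx[a], idx[b]) for a, b in zip(corpus, corpus[1:])]
    for _ in range(epochs):
        for i, j in pairs:
            probs = softmax(W[i])
            # Gradient of the cross-entropy loss for row i is probs - one_hot(j).
            for k in range(n):
                target = 1.0 if k == j else 0.0
                W[i][k] -= lr * (probs[k] - target)
    return W


def predict_next(W, word, vocab):
    """Return the most likely next word and its probability under weights W."""
    idx = {w: i for i, w in enumerate(vocab)}
    probs = softmax(W[idx[word]])
    best = max(range(len(vocab)), key=lambda k: probs[k])
    return vocab[best], probs[best]


if __name__ == "__main__":
    untrained = [[0.0] * len(VOCAB) for _ in VOCAB]
    trained = train_bigram(CORPUS, VOCAB)
    for label, W in [("untrained", untrained), ("trained", trained)]:
        word, p = predict_next(W, "cat", VOCAB)
        print(f"{label}: after 'cat' -> {word!r} (p={p:.2f})")
```

With all-zero weights every next word is equally likely (0.2 each across five words). After training, the row for "cat" concentrates almost all of its probability on "sat". Nothing else in the program changed: only the learned numbers did.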

🔓 What Are Open-Weight Models?

- Publicly available trained weights (but not always code or data)

- Anyone can download, run, or fine-tune the model, subject to its license terms

- Useful for custom AI tools, NLP tasks, sentiment analysis, etc.
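The download, run, and fine-tune workflow can be illustrated with a deliberately tiny stand-in. This sketch assumes a hypothetical bag-of-words sentiment model (a logistic regression, far simpler than an LLM) whose "released" weights are just a JSON file. The words, labels, and the helper names `score` and `fine_tune` are illustrative only and do not reflect any real model's file format or API. Real open-weight LLMs ship billions of parameters, often as safetensors files, and are fine-tuned with dedicated frameworks. The idea is the same, though: the published numbers are the starting point, and anyone holding them can keep training.

```python
import json
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def score(weights, text):
    """Probability that `text` is positive under a bag-of-words linear model."""
    z = weights.get("__bias__", 0.0)
    for word in text.lower().split():
        z += weights.get(word, 0.0)  # unseen words contribute nothing
    return sigmoid(z)


def fine_tune(weights, examples, epochs=50, lr=0.5):
    """Return an adapted copy of `weights`, trained on (text, label) pairs (1 = positive)."""
    w = dict(weights)  # copy, so the published weights stay untouched
    for _ in range(epochs):
        for text, label in examples:
            error = score(w, text) - label  # gradient of log-loss w.r.t. the logit
            w["__bias__"] = w.get("__bias__", 0.0) - lr * error
            for word in text.lower().split():
                w[word] = w.get(word, 0.0) - lr * error
    return w


# 1. A publisher "releases" trained weights as a plain file (here, a JSON string).
RELEASED = json.dumps({"__bias__": 0.0, "good": 2.0, "bad": -2.0})

# 2. Anyone can download and load the weights, then run the model as-is.
base = json.loads(RELEASED)

# 3. Or fine-tune them on niche data containing words the original never saw.
DOMAIN_DATA = [("tough but good", 1), ("tough and bad", 0), ("tough paper", 0)]
tuned = fine_tune(base, DOMAIN_DATA)

if __name__ == "__main__":
    for text in ["good", "tough paper"]:
        print(f"{text!r}: base={score(base, text):.2f} tuned={score(tuned, text):.2f}")
```

The base model has no opinion on "tough paper" (probability 0.5) because it never learned weights for those words. The fine-tuned copy classifies it as negative. Because `fine_tune` works on a copy, the released weights stay available for others to adapt in their own way.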

🔬 Open Weight ≠ Open Source

| Feature | Open-Weight Model | Open-Source Model |
|---|---|---|
| Trained Weights | ✅ Public | ✅ Public |
| Source Code | ❌ Often Not Public | ✅ Public |
| Training Data | ❌ Usually Not Shared | ✅ May Be Shared |
| Customization | Limited | Full Access |

🔄 Why This Shift?

- OpenAI faces pressure from:

➤ Meta’s LLaMA: Over 1B downloads

➤ Mistral, DeepSeek, Falcon and other open-weight alternatives

- OpenAI aims to regain developer trust and offer developers more flexibility

📡 Implications for the AI Ecosystem

- Democratizes access to high-quality LLMs

- Boosts AI-powered startups, research, and education

- Raises questions around security, misuse, and control

- Signals a broader trend toward transparent innovation

📚 Relevance for UPSC

- GS3: Science & Tech – AI Ethics, Data Governance

- GS2: Public Access to Technology, Global Tech Competition

- Essay: “Openness is not just a feature—it’s a philosophy of sharing power.”

✨ Closing Whisper

“When the mind of a machine is shared, the dreams of many begin to code their futures.”

🔥 A Thought Spark – by IAS Monk

In every model weight lies a memory of language—compressed, calculated, learned.

When that weight is made public, it is not just technology being shared—it is potential, freedom, and a silent invitation to innovate.