🌑 Knowledge Drop – 017: India’s New AI Governance Guidelines: Building Trust, Safety & Innovation for an AI-Driven Future | Prelims MCQs & High Quality Mains Essay

India’s New AI Governance Guidelines: Building Trust, Safety & Innovation for an AI-Driven Future

Highlights Today — PETAL 017

Date: November 14, 2025

Syllabus: GS2 – Governance, Policy, Emerging Technologies (AI)

🤖 Thematic Focus:

AI Governance • Responsible Innovation • Digital Regulation • Institutional Framework

🔮 Intro Whisper

As intelligence shifts from human minds to machine circuits, the question is no longer whether we will use AI — but how we will govern it wisely.

✨ KEY HIGHLIGHTS

1. India Releases Comprehensive AI Governance Guidelines

- MeitY has published a balanced, innovation-friendly governance framework for Artificial Intelligence.

- Objective: Advance AI progress while minimising risks to individuals, society, and national interests.

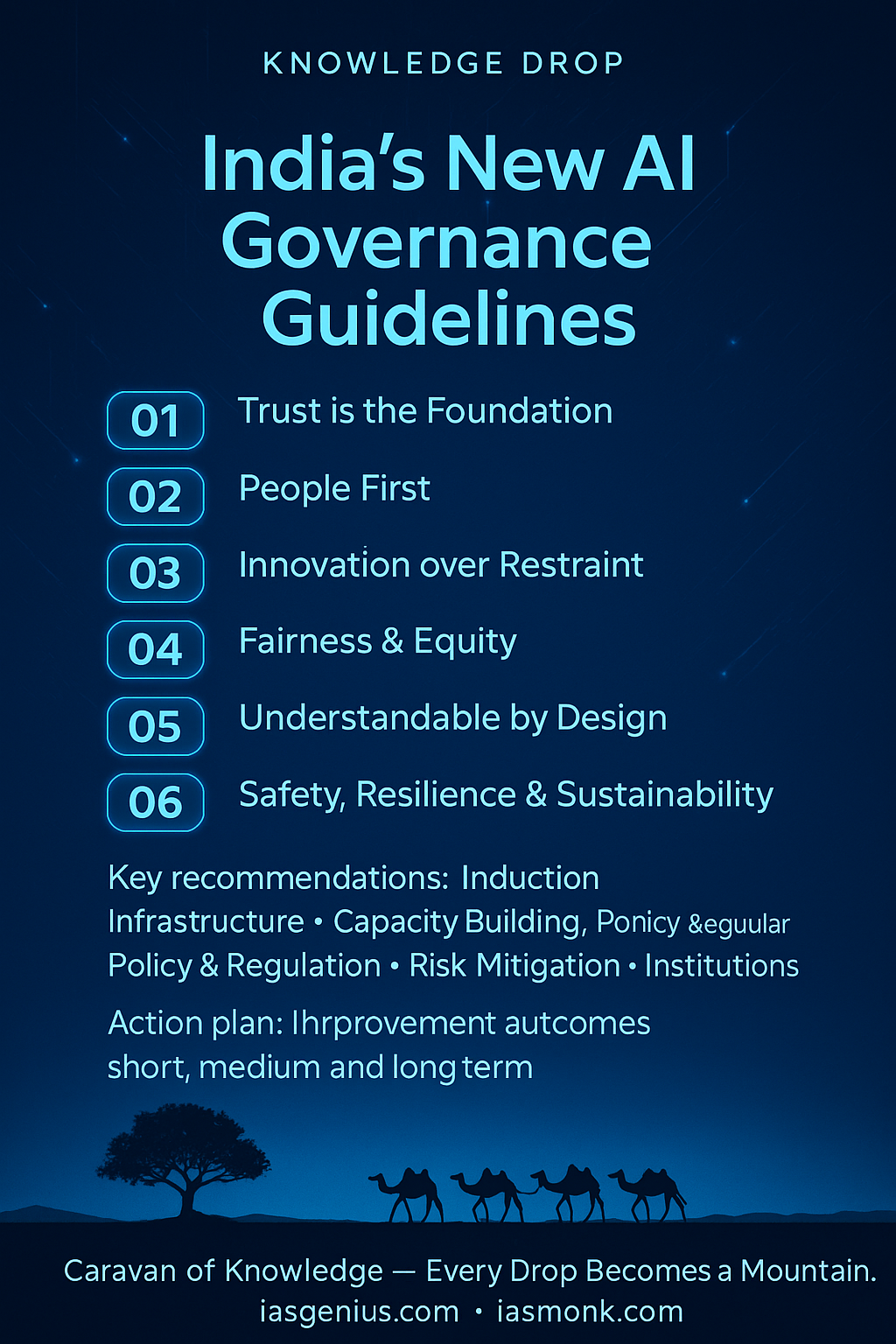

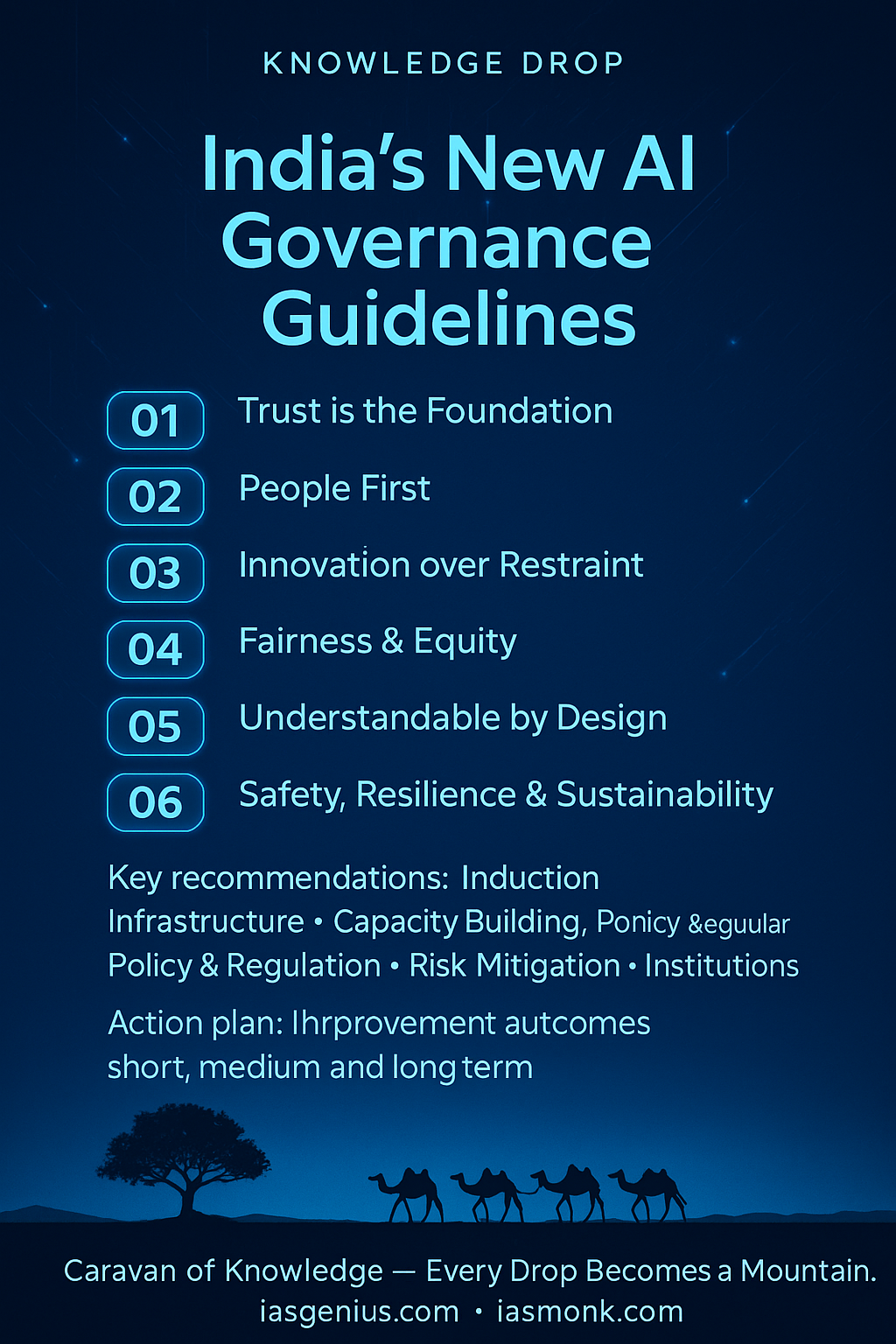

2. Seven Guiding Principles (UPSC Must-Learn)

- Trust is the Foundation – Without trust, adoption stagnates.

- People First – Human oversight, empowerment, dignity.

- Innovation Over Restraint – Encourage safe innovation rather than excessive caution.

- Fairness & Equity – Prevent discrimination; promote inclusive development.

- Accountability – Clear responsibilities; enforceable rules.

- Understandable by Design – Explainable AI; transparency for users & regulators.

- Safety, Resilience & Sustainability – AI systems must withstand shocks and remain sustainable.

3. Six Core Pillars of Governance (High-Value for Mains)

- Infrastructure – Expand access to compute, data, DPI, and AI safety tools.

- Capacity Building – Skilling, awareness, public literacy on AI benefits & risks.

- Policy & Regulation – Agile, flexible, innovation-friendly frameworks.

- Risk Mitigation – India-specific models reflecting real-world harm.

- Accountability – Graded liability based on function, risk, and due diligence.

- Institutions – Whole-of-government model integrating ministries, regulators, standards bodies.

4. Institutional Architecture Proposed

- AI Governance Group (High-level apex body)

- Government Ministries & Agencies: MeitY, MEA, MHA, DoT, etc.

- Sectoral Regulators: RBI, SEBI, TRAI, CCI, IRDAI

- Advisory Bodies: NITI Aayog, Office of PSA, Scientific Advisory Groups

- Standards Bodies: BIS, TEC

This ensures horizontal and vertical coordination across India’s regulatory ecosystem.

5. Action Plan: Short, Medium & Long-Term Roadmap

🔹 Short-Term Priorities

- Establish governance institutions

- Create India-specific AI risk frameworks

- Develop liability regimes

- Launch awareness programmes

- Expand access to compute infrastructure

- Increase access to AI safety tools

🔹 Medium-Term Priorities

- Publish common standards

- Amend laws & regulations

- Operationalise AI incident systems

- Pilot regulatory sandboxes

- Integrate AI with Digital Public Infrastructure (DPI)

🔹 Long-Term Priorities

- Continue capacity building and stakeholder engagement

- Refine governance frameworks to sustain the digital ecosystem

- Draft new AI laws based on emerging risks & capabilities

GS Paper Mapping

GS2: Governance, Digital Policies, Role of Government in Emerging Tech, Accountability & Regulation

GS3: Cybersecurity, Digital Economy, Innovation Ecosystems

GS4: AI Ethics — Fairness, Transparency, Accountability

🧭 Closing Thought

To govern AI is to govern power itself —

not to restrict it,

but to guide it with wisdom, equity, and human dignity.

— IAS Monk

Target IAS-26: Daily MCQs

📌 Prelims Practice MCQs

Topic: India’s AI Governance Guidelines SET-1

MCQ 1 TYPE 1 — How Many Statements Are Correct?

Consider the following statements regarding India’s AI Governance Guidelines:

1) The guidelines prioritise people-centric and human-oversight approaches.

2) “Innovation over restraint” is one of the core principles of the framework.

3) The guidelines propose a single unified regulator for all AI-related matters.

4) Safety, resilience, and environmental sustainability are recognised as essential considerations for AI systems.

How many of the above statements are correct?

A) Only two

B) Only three

C) All four

D) Only one

🟩 Correct Answer: B) Only three

🧠 Explanation:

1) ✅ True – “People First” is one of the seven principles.

2) ✅ True – “Innovation over Restraint” is one of the seven principles.

3) ❌ False – A multi-institutional “whole-of-government” framework is proposed, not a single regulator.

4) ✅ True – Safety, resilience, and sustainability are key guiding principles.

MCQ 2 TYPE 2 — Two-Statement Type

Consider the following statements:

1) India’s AI Governance Guidelines adopt a graded liability system based on risk and due diligence.

2) The guidelines mandate immediate enactment of a comprehensive standalone AI law.

Which of the above statements is/are correct?

A) Only 1 is correct

B) Only 2 is correct

C) Both are correct

D) Neither is correct

🟩 Correct Answer: A) Only 1 is correct

🧠 Explanation:

1) ✅ True – Liability varies by function, risk level, and due diligence.

2) ❌ False – The guidelines do not mandate a standalone AI law; they propose phased amendments and long-term legislative evolution.

MCQ 3 TYPE 3 — Code-Based Statement Selection

With reference to the Action Plan under India’s AI Governance Guidelines, consider the following statements:

1) Short-term actions include setting up governance institutions and developing India-specific AI risk frameworks.

2) Medium-term actions include publishing common standards and operationalising AI incident systems.

3) Long-term actions include drafting new AI laws based solely on global developments without considering Indian risks.

Which of the above statements is/are correct?

A) 1 and 2 only

B) 2 and 3 only

C) 1 and 3 only

D) 1, 2 and 3

🟩 Correct Answer: A) 1 and 2 only

🧠 Explanation:

1) ✅ True – These are key short-term priorities.

2) ✅ True – Common standards and AI incident systems are medium-term priorities, alongside amendments to laws and regulations.

3) ❌ False – Long-term actions involve drafting laws based on Indian risks and emerging capabilities, not on global developments alone.

MCQ 4 TYPE 4 — Direct Factual Question

Which of the following bodies is proposed to serve as the apex coordinating institution under India’s AI Governance Guidelines?

A) NITI Aayog

B) AI Governance Group

C) Office of the PSA

D) TRAI

🟩 Correct Answer: B) AI Governance Group

🧠 Explanation:

The guidelines propose an apex-level AI Governance Group coordinating ministries, regulators, and standards bodies.

MCQ 5 TYPE 5 — UPSC 2025 Linkage Reasoning Format (I, II, III)

Consider the following statements:

Statement I:

India’s AI Governance Guidelines emphasise balancing innovation with safety to prevent over-regulation.

Statement II:

The principles include “Innovation over restraint,” encouraging growth while managing risks.

Statement III:

The guidelines prohibit the use of regulatory sandboxes as they may slow down innovation.

Which one of the following is correct in respect of the above statements?

A) Both Statement II and Statement III are correct and both of them explain Statement I

B) Both Statement II and Statement III are correct but only one of them explains Statement I

C) Only one of the Statements II and III is correct and that explains Statement I

D) Neither Statement II nor Statement III is correct

🟩 Correct Answer: C) Only one of the Statements II and III is correct and that explains Statement I

🧠 Explanation:

Statement II: ✅ True – It directly explains the balanced approach in Statement I.

Statement III: ❌ False – Guidelines encourage regulatory sandboxes to test AI safely.

Thus, only Statement II is correct, and it explains Statement I.

High Quality Mains Essay For Practice: Essay 1

Word Limit 1000-1200

India’s New AI Governance Guidelines: Creating a Future-Ready, Human-Centric Digital State

A Full-Length Essay (~1200 words)

GS2 – Governance, Emerging Technologies, Regulation, Accountability

Introduction — Governing Intelligence in the Age of Machines

Human civilisation has governed empires, markets, armies, and resources — but never before has it had to govern intelligence itself. Artificial Intelligence (AI) today influences economies, democracies, public services, creativity, cybersecurity, and national security. The challenge is unprecedented: how to unlock the transformative power of AI while preventing harm to individuals and society.

Against this backdrop, the Ministry of Electronics and Information Technology (MeitY) released the India AI Governance Guidelines (2025) — a landmark, forward-looking framework that seeks to create trustworthy, responsible, and innovation-friendly AI ecosystems. Unlike many global models that lean toward either extreme restraint or aggressive deregulation, India adopts a balanced, agile, and context-driven model grounded in India’s socio-economic realities, digital infrastructure, and constitutional values.

These guidelines represent not just a regulatory strategy but a new social contract between humans and intelligent systems.

A Vision Shaped for India — Why AI Governance Matters Now

India is entering a decisive decade:

- Digital Public Infrastructure (DPI) such as Aadhaar, UPI, DigiLocker, and FASTag forms the backbone of public service delivery.

- AI adoption is accelerating across agriculture, health, finance, judiciary, urban governance, and national security.

- Startups and researchers are creating indigenous LLMs, agentic systems, and AI tools for the next billion users.

- Risks such as deepfakes, misinformation, privacy violations, bias, job disruption, and cyber threats have intensified.

In this landscape, India cannot afford a governance vacuum. At the same time, excessive restrictions could stifle innovation, slow economic growth, and disadvantage Indian startups.

Thus the guidelines attempt a delicate balance: “mitigate risks without killing innovation.”

This places India in a unique global position — neither Euro-style over-regulation nor Silicon Valley-style techno-libertarianism, but a middle path anchored in trust, transparency, and inclusion.

The Seven Guiding Principles — The Ethical Foundation

The guidelines articulate seven principles that apply across sectors. These principles are not ornamental but serve as operational ethics for AI design, deployment, and governance:

1. Trust is the Foundation

Without trust, users reject technology, markets stagnate, and AI adoption collapses.

Trust is built through transparency, accountability, and safety.

2. People First

AI must empower—not replace—humans.

Human oversight, dignity, and agency are central.

3. Innovation over Restraint

Caution should not become paralysis.

India supports progressive regulation that nurtures innovation while preventing harm.

4. Fairness & Equity

AI must not amplify societal inequalities.

This includes eliminating algorithmic bias, ensuring access to benefits, and promoting inclusive datasets.

5. Accountability

Clear responsibilities must be assigned for AI actions, failures, incidents, and misuse.

6. Understandable by Design

Explainable AI is essential—users must understand why a system took a decision.

7. Safety, Resilience & Sustainability

AI systems must withstand systemic shocks and cyberattacks, and must deliver long-term societal value.

Together, these principles form the ethical DNA of India’s AI governance model.

Six Pillars of Governance — The Policy Architecture

MeitY’s guidelines examine India’s needs through six critical pillars, each addressing a distinct governance dimension.

1. Infrastructure

India must expand access to:

- Compute power

- Data resources

- Cloud infrastructure

- Digital Public Infrastructure (DPI)

- AI safety tools

This reduces dependence on foreign hardware, ensures data sovereignty, and accelerates innovation.

2. Capacity Building

India aims to create an AI-literate society by empowering:

- Students

- Startups

- Public officials

- Industry professionals

- Citizens

Skilling, awareness programmes, and grassroots AI literacy are crucial to prevent misuse and build trust.

3. Policy & Regulation

A balanced, agile regulatory structure is recommended:

- Review existing laws

- Identify gaps in cyber, telecom, privacy, competition, finance, and consumer protection

- Introduce graded, risk-based regulation

- Ensure accountability mechanisms for high-risk AI systems

4. Risk Mitigation

A uniquely Indian AI Risk Assessment Framework will be developed based on real-world harm patterns such as:

- Deepfakes

- Fraud

- Welfare exclusion

- Job displacement

- Cyber-threats

- Bias in public service algorithms

5. Accountability

A graded liability system based on:

- Level of risk

- Nature of function

- Degree of diligence

- Intentionality vs negligence

High-risk systems (healthcare, finance, welfare, law enforcement) require higher diligence and stricter accountability.

6. Institutions

A “whole-of-government” institutional model is proposed:

- AI Governance Group (apex body)

- Ministries and agencies: MeitY, DoT, MHA, MEA

- Sectoral regulators: RBI, SEBI, TRAI, CCI, IRDAI

- Advisory bodies: NITI Aayog, Office of PSA

- Standards bodies: BIS, TEC

This ensures horizontal coordination and vertical scalability across all sectors.

Action Plan: Short, Medium & Long-Term Roadmap

The guidelines provide a phased action plan:

Short-Term Actions

- Set up governance institutions

- Develop India-specific AI risk frameworks

- Create liability regimes

- Launch awareness programmes

- Expand access to infrastructure

- Increase access to AI safety tools

- Adopt voluntary industry commitments

Medium-Term Actions

- Publish common standards

- Amend existing laws to reflect AI-related risks

- Operationalise AI incident reporting systems

- Pilot regulatory sandboxes

- Integrate AI with DPI systems

Long-Term Actions

- Continuous capacity building

- Update and refine governance frameworks

- Draft new AI laws based on emerging capabilities

- Ensure sustainability of the digital ecosystem

This phased design ensures stability, adaptability, and long-term resilience.

Global Relevance — India’s Balancing Model

While the EU focuses on stringent regulation (AI Act) and the US on innovation-first approaches, India offers a third way:

- A human-centric, trust-driven model, like the EU’s

- An innovation-friendly, agile model, like that of the US

- Inclusion and scale focus leveraging India’s DPI

- Global South leadership grounded in affordability, access, and equity

India’s guidelines could become a reference framework for developing nations seeking to adopt AI responsibly without stifling growth.

Challenges Ahead — The Road Is Long

Despite the strong framework, India faces challenges:

1. Capacity & Enforcement Gaps

Regulators must upgrade technical expertise to evaluate AI systems.

2. High Cost of Compute Infrastructure

India needs domestic semiconductor & cloud capacity.

3. Fragmented Sectoral Regulations

Financial, telecom, health, and urban AI systems require alignment.

4. Balancing Innovation & Safety

Over-regulation can kill startups; under-regulation can harm citizens.

5. Public Trust Deficit

Deepfakes, misinformation, and fraud could undermine digital confidence.

6. Skilling the Workforce

Millions need AI-ready skills to remain competitive.

India’s guidelines recognise these challenges and attempt to create enabling conditions for long-term solutions.

Conclusion — Governing the Future With Wisdom

AI is not merely a technology; it is a structural force reshaping society, governance, economy, and ethics. India’s AI Governance Guidelines stand at the intersection of trust and innovation, offering a framework that is futuristic, responsible, and deeply humane.

By prioritising fairness, transparency, accountability, safety, and innovation, India declares that technology must serve people, not the other way around.

If implemented effectively, this framework could help India build an AI ecosystem that is not only globally competitive but also socially just, inclusive, and aligned with constitutional values.

In a world where machines are learning faster than ever, India chooses not fear — but foresight.

Not restraint — but responsible progress.

Not control — but collective wisdom.

— IAS Monk

High Quality Mains Essay For Practice: Essay 2

Word Limit 1000-1200

The Light That Watches the Flame: India’s AI Governance Journey

“Every new intelligence we create becomes a mirror in which we must rediscover our own.” — Ursula K. Le Guin

When a civilisation reaches a moment where it can shape intelligence outside itself, it also enters an age of profound ethical responsibility. Artificial Intelligence, that enigmatic glow pulsing in the circuits of our machines, is no longer just a tool; it is a participant in the daily rhythms of society — in decisions, predictions, opportunities, and even the subtle undercurrents of public emotion. The Indian state, sensing the magnitude of this shift, has stepped forward not merely with regulation but with reflection. The release of the India AI Governance Guidelines is the nation’s attempt to hold the mirror steady — not to restrain the future, but to understand it, negotiate with it, and guide it with a steady, human hand.

The deeper truth is that AI has changed the texture of human agency. Once upon a time, decisions were slow, deliberate, fallible, and deeply human. Today, they arrive at machine speed. A loan is approved in less time than a single heartbeat. A welfare disbursement can be paused by a silent algorithm. A deepfake can rupture reputations in seconds. And yet, paradoxically, the very same technologies hold the promise of transforming agriculture, medicine, governance, and creativity. What India chooses to regulate, promote, restrain, or encourage today will define the moral architecture of tomorrow’s digital civilisation.

Thus, the guidelines are not only technical. They are moral. They are psychological. They are civilisational.

India’s approach is fascinating because it rises from a democratic soil unlike that of the West or the Far East. We are a civilisation of languages, scripts, alphabets, castes, customs, faiths, and contradictions — a civilisation where diversity is not an adjective but oxygen. For AI to function fairly in such a landscape, governance cannot simply be imported; it must be imagined anew. The guidelines recognise this. They speak in a voice that is at once legal and humane, scientific and compassionate, visionary yet pragmatic.

At their heart lies a simple question: How do we govern intelligence that is not alive, yet influences the living? It is a question without historical precedent. AI is not a king to overthrow, not an industrial machine to regulate, not a social ideology to debate. It is an invisible presence woven through databases, texts, images, decisions, and predictions. Its power is subtle — not loud like an army, but quiet like a river carving mountains over time. India’s guidelines attempt to understand this quiet power, which is precisely why they lean on trust, explainability, accountability, and fairness — not as bureaucratic jargon, but as philosophical anchors.

There is something poetic in the phrase “People First.” In a world dazzled by machine capability, India puts the human at the center, not out of nostalgia but out of realism. Machines can optimise. Machines can recommend. Machines can predict. But machines cannot care. They cannot hold the weight of human dignity, nor comprehend the fragility of human suffering. In a nation where a mismatch in a welfare database can mean a family goes hungry, human oversight is not optional — it is sacred.

The guidelines recognise another paradox: the need for innovation without harm. “Innovation over restraint” is not a call to deregulation but an insistence that fear must not paralyse progress. The history of technology shows that societies that embrace innovation with wisdom rise, and those that over-regulate stagnate. India chooses neither recklessness nor timidity. Instead, it chooses a middle path — a profoundly Indian instinct — to advance responsibly, slowly where needed, boldly where possible.

In the veins of this document runs a philosophy of distributed power. Instead of a single regulator, it visualises a constellation — ministries, regulators, standards bodies, advisory councils — a democratic orchestra rather than a monolithic conductor. This too is philosophically sound; no single body can govern something as vast and evolving as AI. Intelligence is not a river with one tributary — it is a network of streams flowing through finance, telecom, health, education, transport, justice, and national security. The guidelines recognise this pluralism and build governance not as a fortress but as a federation.

What is most striking, perhaps, is the emphasis on explainability. In earlier centuries, priests guarded religious knowledge. Later, bureaucrats guarded administrative knowledge. Today, algorithms guard computational knowledge. But unlike priests or bureaucrats, algorithms do not choose secrecy — they simply are secret by nature, their complexity impenetrable to ordinary human reasoning. To demand that AI be explainable is to demand transparency from the invisible, accountability from the silent, humility from the powerful. It is a radical demand.

The true beauty of India’s guidelines lies not in any single clause but in the worldview they project: a world where technology grows, but not at the cost of human agency; a world where innovation is welcomed but anchored; a world where intelligence expands but remains accountable; a world where the machine does not overshadow the person; a world where progress does not outpace wisdom.

This worldview is deeply needed at a time when the world trembles before deepfakes, misinformation storms, autonomous decision systems, and geopolitical algorithmic warfare. India’s guidelines, to be sure, are not perfect — no governance framework for a technology evolving this rapidly can ever be final — but they represent an attempt to stand at the edge of the unknown and speak with clarity.

The real test will lie in implementation, enforcement, continuous learning, and iterative adaptation. But if India succeeds — even partially — it will offer the world a new model: a governance philosophy that neither worships technology nor fears it, but understands it as a tool that must be shaped by human values.

In the end, perhaps, AI governance is not about machines at all. It is about us. How we choose to design, deploy, question, challenge, refine, and regulate AI will reveal more about our civilisation than about our technology. The guidelines, in that sense, are a mirror — showing us the values we wish to preserve as we step into a future filled with invisible companions of extraordinary power.

And so the journey begins with a mirror and ends with a compass — a reminder that governance, like intelligence, must always evolve, and that the deepest algorithms are not built in silicon but in human conscience.

“The future is not a place we reach, but a shape we learn to guide.” — IAS Monk